Hiding From Google: A Real Test Case

January 24th, 2016

Lots of articles and books have been written on how to increase a website’s visibility on Google, but you won’t find many resources on the opposite task: how to remove your website from every search result page.

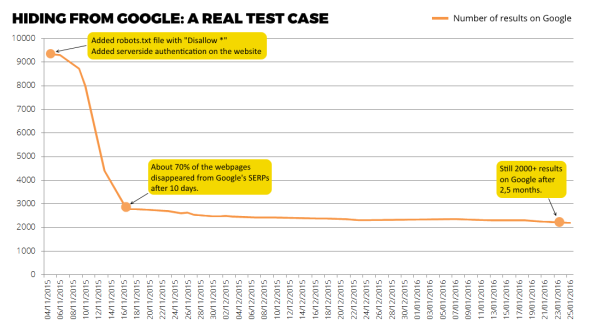

I recently had to do this for a non-critical asset, and I had fun monitoring how long it takes for a website with about 10K indexed pages to be completely forgotten by the most popular search engine.

I started my little experiment with the following two actions:

- the robots.txt file was modified to block every user agent on every page of the website;

- server-side authentication was added to the website’s root folder, just to be sure that Google could no longer access those URLs.
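As a rough illustration of these two actions, here is a minimal Python sketch using only the standard library. Blocking every crawler on every page is conventionally done with the two-line robots.txt `User-agent: *` / `Disallow: /`, and `urllib.robotparser` can confirm how a compliant crawler would read it. The post does not say which authentication scheme was used, so the HTTP Basic authentication WSGI wrapper is an assumption; `BLOCK_ALL_ROBOTS_TXT`, `crawler_allowed` and `require_basic_auth` are hypothetical names chosen for this example.

```python
import base64
import hmac
from urllib.robotparser import RobotFileParser

# robots.txt contents that disallow every path for every user agent.
BLOCK_ALL_ROBOTS_TXT = "User-agent: *\nDisallow: /\n"


def crawler_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def require_basic_auth(app, username: str, password: str, realm: str = "Private"):
    """Wrap a WSGI app so every path answers 401 unless Basic credentials match.

    Hypothetical helper: the original post does not name the auth scheme.
    """
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    expected = f"Basic {token}".encode()

    def wrapped(environ, start_response):
        supplied = environ.get("HTTP_AUTHORIZATION", "").encode()
        # Constant-time comparison of the Authorization header.
        if hmac.compare_digest(supplied, expected):
            return app(environ, start_response)
        start_response("401 Unauthorized", [
            ("WWW-Authenticate", f'Basic realm="{realm}"'),
            ("Content-Type", "text/plain; charset=utf-8"),
        ])
        return [b"Authentication required\n"]

    return wrapped
```

For example, `crawler_allowed(BLOCK_ALL_ROBOTS_TXT, "Googlebot", "https://example.com/any/page")` returns `False`, and wrapping the site's WSGI app with `require_basic_auth(site, "admin", "secret")` makes every unauthenticated request, from a crawler or anyone else, receive a 401.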

Then I just sat back and watched my website slowly disappear from Google’s SERPs. I am surprised by the results: after 10 days, about 70% of the original 10K pages had been removed, but the rest is taking much longer. Now, two and a half months after the start, I still have more than 2K indexed pages.

Note that you can speed up the process with further actions, in particular resubmitting a cleaned XML sitemap or even manually blocking URLs through Google Webmaster Tools. Either way, it’s very interesting to see how Google handles this kind of situation.