October 7th, 2016

This September, I took part in a competition promoted by Strava, the world-famous sports tracker and social network for athletes, to showcase the innovation and authenticity of the developer community using their API. Of course, I joined this Developer Challenge with my personal project, Toolbox For Strava.

Of the nearly 100 submissions, the Strava team created a shortlist with the top 25. Then the top 5 were selected by Ben Lowe, creator of the amazing VeloViewer; Mark Shaw and Mark Gainey, Strava’s CTO and CEO; and Ariel Poler, a member of Strava’s board of directors.

On October 5th, the winners were announced on the official Strava blog and Toolbox For Strava was awarded second prize! This is great news for my project because of the high visibility generated by the blog post and the subsequent buzz on social networks: in a couple of days, the number of connected users jumped from about 34K to over 40K! I will also receive a Garmin EDGE 520 (cool, I was thinking of buying one…), a jersey signed by Ted King and a free Strava Premium membership for one year.

A personal thank-you to everyone supporting the project with ideas, suggestions, donations, beta-testing and encouragement: your appreciation is the fuel that keeps me spending part of my (rare) free time growing Toolbox For Strava. So stay tuned and get ready for new features: I’m working on them!

· Tags: api, awards, freebies, strava

July 29th, 2016

Defining a URL structure for an international website is a critical decision for every online business operating on a global scale and serving localized content in different countries. The chosen setup has several consequences: from a technical perspective, it can constrain the server architecture; from a user perspective, it can be more or less easy to understand; from an SEO perspective, it can be more or less effective at driving qualified organic traffic to your website.

Interestingly, there is no universal strategy, and even the biggest online brands differ in how they address this challenge. Below is a summary of the pros and cons of the different strategies, with a focus on the factors that affect this choice.

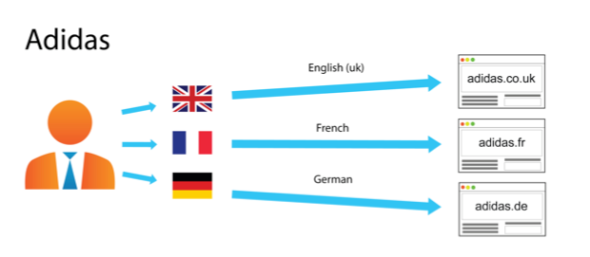

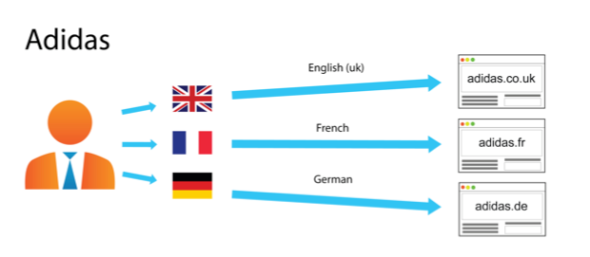

Country-Specific URL Structure Using ccTLDs

Technical perspective. This is the most expensive strategy because it requires buying and maintaining a local domain for every country involved. Obtaining your brand’s domain name everywhere can also be problematic: it may already be taken, in which case time and cost can grow dramatically. On the other hand, it allows different server locations, which can be very important for a big global business that needs the highest possible performance in every region.

User perspective. This is very easy for the general Internet user to understand. A local ccTLD generally gives the impression of a closer, faster and better service. On the other hand, a global brand may prefer to be known everywhere with a “.com” domain. Also, the “.com” domain is usually the main entry point for direct traffic, so you must own it and properly redirect its visitors to the right local domain.

SEO perspective. Since ccTLDs are the primary element that Google uses to determine a website’s targeted country, this is the easiest and most direct technique for managing optimized international URLs.

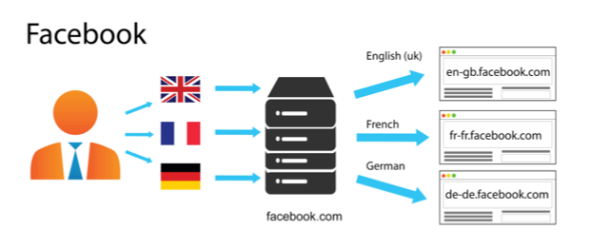

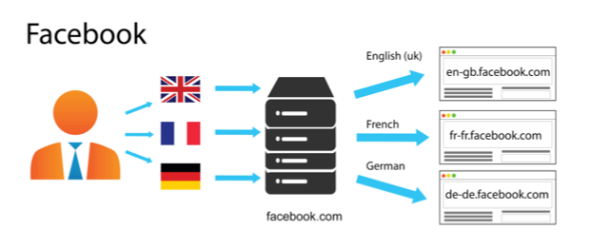

A gTLD With Country-Specific Subdomains

Technical perspective. This technique is easy to set up. It takes some effort to maintain the different hosts, but it is not expensive and, like the previous one, it allows a decentralized architecture with different server locations.

User perspective. Definitely not the clearest or most immediate choice for your users. The well-known “www.yourname.com” structure is not preserved intact.

SEO perspective. Since it does not use separate ccTLDs, other actions are required to let Google know the intended audience of your pages (e.g., Search Console geotargeting and hreflang annotations).
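To make the hreflang part more concrete, here is a minimal Python sketch that generates the alternate-link annotations for one page. The subdomains, the language-country codes and the `www` fallback are my own placeholder assumptions, not a real configuration.

```python
from html import escape

# Hypothetical mapping: hreflang code -> country-specific subdomain.
LOCALIZED_HOSTS = {
    "en-US": "us.yourname.com",
    "en-GB": "uk.yourname.com",
    "it-IT": "it.yourname.com",
}
DEFAULT_HOST = "www.yourname.com"  # assumed fallback for all other users


def hreflang_links(path):
    """Return the <link rel="alternate"> tags for every localized version of a page."""
    links = []
    for code, host in LOCALIZED_HOSTS.items():
        url = escape(f"https://{host}{path}", quote=True)
        links.append(f'<link rel="alternate" hreflang="{code}" href="{url}" />')
    # "x-default" marks the version to show when no other language/country matches.
    fallback = escape(f"https://{DEFAULT_HOST}{path}", quote=True)
    links.append(f'<link rel="alternate" hreflang="x-default" href="{fallback}" />')
    return links


if __name__ == "__main__":
    print("\n".join(hreflang_links("/lipsticks/")))
```

Every localized version of the page should carry the same full set of tags in its `<head>`, including the one pointing to itself.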

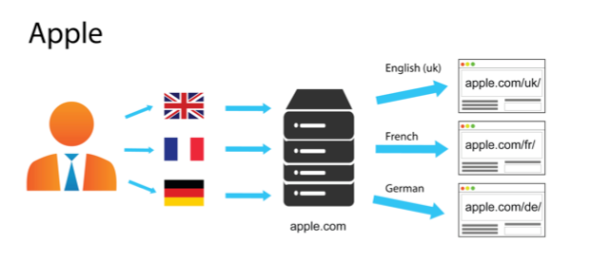

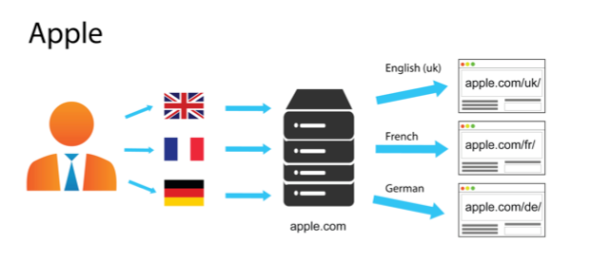

A gTLD With Country-Specific Subdirectories

Technical perspective. Very easy to set up, this technique also requires little maintenance, with the same host across the whole network. On the other hand, it is not the right solution if you need to manage separate server locations (or plan to in the future).

User perspective. It is quite clear for the user and it’s probably the best choice for a global brand that wants to be known and visible everywhere with a clear “www.yourname.com” domain.

SEO perspective. The same considerations apply as for the previous technique.

Querystring Parameters For Country Localization

This technique is not recommended. From a user perspective, the localization is not easily recognizable. But the most important drawbacks are related to SEO: querystring parameters are often ignored by search engines and, at the moment, if your URLs are built this way, it’s not possible to use Google Search Console for geotargeting. So why is Twitter using this technique? I imagine they made that decision when SEO was not so important for them.
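To compare the four strategies side by side, here is a small Python sketch that builds the same localized page URL under each of them. The brand name, the `country` parameter name and the simple `.{country}` ccTLD pattern are illustrative assumptions (real ccTLDs are not always that regular, e.g. `.co.uk`).

```python
from urllib.parse import urlencode

BRAND = "yourname"  # placeholder brand, as in the examples above


def localized_url(strategy, country, path="/"):
    """Build the URL of a localized page under one of the four strategies."""
    country = country.lower()
    if strategy == "cctld":
        return f"https://www.{BRAND}.{country}{path}"
    if strategy == "subdomain":
        return f"https://{country}.{BRAND}.com{path}"
    if strategy == "subdirectory":
        return f"https://www.{BRAND}.com/{country}{path}"
    if strategy == "querystring":
        query = urlencode({"country": country})  # hypothetical parameter name
        return f"https://www.{BRAND}.com{path}?{query}"
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    for name in ("cctld", "subdomain", "subdirectory", "querystring"):
        print(f"{name:13}", localized_url(name, "IT", "/shop/"))
```

Only the first three variants put the country into the host or the path, which is where both users and search engines expect to find it.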

What does Google use for geotargeting a page?

Google generally uses the following signals to define a website’s targeted country and language, in order of priority:

- Country-code top-level domain names (ccTLDs). Websites with a local ccTLD are generally treated as local content by Google. But pay attention to some so-called “vanity ccTLDs” (such as .tv, .me, etc.): they are treated as generic and do not have the same local value in terms of SEO. See a full list of domains Google treats as generic. Note that regional top-level domains such as .eu or .asia are also treated by Google as generic top-level domains.

- Geotargeting settings. If your site has a generic top-level domain name you can use the geotargeting settings in Google Search Console to indicate to Google the targeted country of your website.

- Server location (through the IP address of the server). The server location can be used as a signal about your site’s targeted audience. However, Google is aware that many websites use distributed CDNs or are hosted in countries offering better infrastructure, so it is not a definitive signal.

- Other signals. Pay attention to the local addresses and phone numbers on the pages, to the currency (especially for online stores), to links from other local sites: they are all used by Google to determine your website’s country and language localization. Also, if your website has any kind of physical touchpoint, be sure to use Google My Business properly.

My notes from a real case

In 2015 we chose the third option when planning the final domain migration for KIKO Milano’s e-commerce network, which had reached a global scale with the opening of the US website. Previously, we had a specific ccTLD for every country, but that was unsatisfactory for technical, legal and branding reasons. So we moved everything under a “.com” domain, using subfolders to manage the different countries (and languages). In this case, I recommend the following actions (which we took):

- Use an IP detection engine. We placed it on every page, but it’s important at least on your default “country selection” home page, so you can redirect users to the most appropriate subfolder: the one corresponding to the country they are browsing from (plus the language of their browser).

- Do your homework on Google Search Console. Remember to add every website to Google Search Console (previously known as Google Webmaster Tools) and configure the geotargeting settings for each of them, in order to get more qualified traffic from Google.

- Try to own your brand everywhere. Even though we moved KIKO to a global “.com” domain, we always try to own our local branded domain in every country that is relevant for the business and redirect it to our website. This is a typical brand protection activity, but it is also important for maximizing direct traffic, so you don’t lose users who look for your website by guessing the URL in their browser’s address bar.
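The redirect logic from the first action can be sketched roughly as follows. This is not the code we actually ran: the subfolder map and the `/country/language/` layout are hypothetical, and the country code is assumed to come from whatever IP geolocation service you use.

```python
# Hypothetical subfolders: country code -> languages offered (first one is the default).
SUBFOLDERS = {
    "it": ["it"],
    "us": ["en"],
    "ch": ["de", "fr", "it"],
}
FALLBACK_PATH = "/"  # the default "country selection" home page


def preferred_languages(accept_language):
    """Parse an Accept-Language header into primary language codes, best first."""
    weighted = []
    for item in accept_language.split(","):
        parts = item.strip().split(";")
        tag = parts[0].strip().lower()
        if not tag or tag == "*":
            continue
        quality = 1.0  # a tag without an explicit q value has full weight
        for param in parts[1:]:
            name, _, value = param.strip().partition("=")
            if name == "q":
                try:
                    quality = float(value)
                except ValueError:
                    quality = 0.0
        weighted.append((quality, tag.split("-")[0]))
    # The sort is stable, so tags with equal weight keep their header order.
    weighted.sort(key=lambda pair: pair[0], reverse=True)
    return [language for _, language in weighted]


def target_subfolder(country_code, accept_language=""):
    """Pick the subfolder for a visitor from the detected country and browser language."""
    country = country_code.lower()
    languages = SUBFOLDERS.get(country)
    if languages is None:
        return FALLBACK_PATH  # unknown country: let the user choose
    for language in preferred_languages(accept_language):
        if language in languages:
            return f"/{country}/{language}/"
    return f"/{country}/{languages[0]}/"


if __name__ == "__main__":
    print(target_subfolder("CH", "fr-CH,fr;q=0.9,en;q=0.8"))
    print(target_subfolder("BR", "pt-BR"))
```

Whatever the detection says, it is a good idea to let users override the choice and to remember their preference, since IP-based guesses can be wrong for travellers and VPN users.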

· Tags: digital marketing, ecommerce, google, seo

June 12th, 2016

I recently took part in a two-day class held by SEO legend Bruce Clay, president of Bruce Clay, Inc. and an SEO pioneer since 1996. Here are some quick notes on the status of SEO in 2016 and other interesting sub-topics covered during the class.

A photo with Bruce Clay and Ale Agostini

- Google is in the business of making money. Bruce repeated this “mantra” several times. It seems obvious, but we have to remember that Google wants you to pay to get high positions on its SERPs. This explains the recent changes in the layout of Google’s SERPs, with increased visibility for paid search results, especially on mobile. It also explains why Google does not like giving away too much information that can help people increase organic traffic.

- 58% of people do not recognize paid search as advertising.

- 55% of all search queries are 4 or more words.

- 1-word queries get a lot of impressions but fewer clicks. This suggests that a common behaviour is to try a simple 1-word query and then, since the results are often unsatisfactory, refine it with a more complex one.

- 20% of searches each day are new or haven’t been completed in the past 6 months.

- 70% of queries have no exact-matched keyword. This is of course a great opportunity for SEO.

- Increased visibility for news content. If your page is recognized as news on a specific topic, it can gain very high visibility at the top of Google’s SERPs, bringing you significant traffic peaks (but only for a limited time).

- Local SEO is critical. Map and local results get boosted visibility on Google, especially on mobile. It is critical to maintain a presence on Google My Business (formerly Google Places) and other local directories, with name, address and phone number for every touchpoint you have in the real world.

- A top-three position in SEO, Local and PPC is what will get 90% of all traffic within 2 years. Relying on organic traffic alone is not an option in 2016, with local rankings and PPC growing and consuming a lot of SERP space, especially on mobile (which is also growing a lot).

- Click-through rate by position is not linear on mobile. Of course, everyone knows that the higher you rank on a SERP, the more clicks you get. This is actually not quite true on mobile, where position #4 gets a higher CTR than position #3 due to page scroll.

- Content optimization, SEO-related technicalities and link building are equally important in an SEO strategy. This is a specific question I asked Bruce during a break, to understand whether we should prioritize any of those three “SEO macro-factors”. The answer is that we should consider them all priority-1 items.

- The top ranking goes to the E-A-T websites, where “E” stands for Expertise, “A” for Authoritativeness and “T” for Trustworthiness. So it’s very important to offer fresh, original and complete content, and to demonstrate an optimal level of maintenance from a more technical perspective. “Stale” is a sign of a poorly maintained website. The Google Quality Rating Guidelines (Nov. 2015) can be considered a detailed reference.

- Different people get different results for the same query. Google SERPs are becoming increasingly personalized, taking into account many factors such as search history, behavior, intent and localization.

- The intent of a website is defined by how its pages appear in the eyes of Google. An e-commerce page should be shorter, with fewer words (300 to 400), more and smaller images, more links and a fast load time. By contrast, a website focused on research intent should have longer pages, with more than 500 words, fewer (but bigger) images, fewer links and a relatively slower load time.

- Google is increasing the rank of shorter pages. Until some time ago, 1000 words was the minimum for creating SEO-relevant content. Now that limit has dropped to about 500 words.

- 302 redirects can cause problems and should always be avoided. Just take note of this.

- Words in images are now recognized by Google. If you scan a newspaper article, put it in a PDF and make it visible to Google, you will get the text indexed.

- The title tag should come first in the <head> section, be unique for every page, and be 6-12 words and 62-70 characters long to avoid being cut off with an ellipsis.

- The meta description tag should come second in the <head> of a page, be around 18 words long and use any given word at most twice.

- Google never really said that the keywords meta tag is ignored! So we should continue including it in the <head> of our pages. It should include around 36 words.

- Never purchase links. Advertisements are a valid exception, but you should add a “nofollow” attribute to them.

- The most important SEO technique is this: your pages must not be considered spam by Google. Being flagged leads to very long penalties and has driven companies out of business.

- You should care about the quality of inbound links. Removing low-quality links improves ranking. Links from low-trust or off-topic sites will harm your ranking.

- Accelerated Mobile Pages (AMP) are not strongly recommended for now. They are time-consuming in terms of technical setup and they add limits in terms of layout and advertising options. But we should keep an eye on this project.
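The title and meta description rules from the notes above are easy to check automatically. Here is a rough Python sketch using only the standard library; the thresholds are simply the numbers from the class, and the function and class names are my own. It does not check the “around 18 words” guideline, since I have no precise tolerance for it.

```python
import re
from collections import Counter
from html.parser import HTMLParser


class HeadAudit(HTMLParser):
    """Collect the <title> text and the meta description of a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.description = attributes.get("content") or ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_head(html):
    """Return a list of warnings based on the guidelines quoted in these notes."""
    parser = HeadAudit()
    parser.feed(html)
    warnings = []
    title = parser.title.strip()
    word_count = len(title.split())
    if not 6 <= word_count <= 12:
        warnings.append(f"title has {word_count} words (expected 6-12)")
    if len(title) > 70:
        warnings.append(f"title has {len(title)} characters (may be truncated)")
    counts = Counter(re.findall(r"\w+", parser.description.lower()))
    for word, count in counts.items():
        if count > 2:
            warnings.append(f"description repeats '{word}' {count} times")
    return warnings


if __name__ == "__main__":
    page = """<head>
    <title>Red Lipstick</title>
    <meta name="description" content="Buy red lipstick, red gloss and red liner online">
    </head>"""
    for warning in audit_head(page):
        print(warning)
```

A check like this fits well in a publishing workflow or a periodic crawl, so that pages drifting away from the guidelines are spotted early.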

· Tags: bruce clay, digital marketing, google, seo